Project | 01

JustDeliver Experiment

JustDeliver, an online delivery service, ran an experiment on courier pay. The goal was to distribute the courier-pay budget more effectively while reducing the number of offers rejected by couriers and improving the customer experience.

I took the experiment from discovery to prediction: starting with discovery and analytics, moving to statistical modeling, and advancing to machine learning.

Language: Python

Models: Poisson model, Negative Binomial model, Log-Linear Regression, Logistic Regression, Random Forest, and XGBoost
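The step from a Poisson model to a Negative Binomial model is usually motivated by overdispersion. A Poisson count has variance equal to its mean, so counts whose sample variance is far above the sample mean fit it poorly. The sketch below shows that screening step in plain Python. The rejected-offer counts are hypothetical and are not data from the JustDeliver experiment.

```python
from statistics import mean, variance


def dispersion_ratio(counts):
    """Sample variance divided by sample mean for a list of non-negative counts.

    A Poisson variable has variance equal to its mean, so a ratio near 1 is
    consistent with a Poisson model, while a ratio well above 1
    (overdispersion) points toward a Negative Binomial model.
    """
    m = mean(counts)
    if m == 0:
        raise ValueError("mean count is zero; the ratio is undefined")
    return variance(counts) / m  # variance() uses the n - 1 denominator


# Hypothetical rejected-offer counts per courier (illustrative only).
rejections = [0, 0, 1, 0, 2, 9, 0, 1, 14, 0, 3, 0]
ratio = dispersion_ratio(rejections)
print(f"variance / mean = {ratio:.2f}")  # variance / mean = 7.89
```

The variance-to-mean ratio is only a rough screen. Comparing the fitted Poisson and Negative Binomial models (for example, by AIC or a likelihood-ratio test) is the more formal follow-up.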

Project | 02

Home Value Index (HVI) Risk Reduction Leveraging Random Pools

Home Diversification Corporation aims to make residential homeownership less risky by diversifying and reducing homeowners' home price risk. A homeowner's investment in a house is tied to local home price indices, which are inherently more volatile than the national home price index. The company therefore aimed to hedge the more volatile local indices against less volatile national ones.

To test this hypothesis, we ran three types of analysis in three phases.

1. We used ZIP-code-level and metro-level data to analyze how well Modern Portfolio Theory (MPT) applies to reducing the risk of home value indices. Our principal finding was that larger random pools (20, 40, 60, etc.) produce a less volatile pool index. From those larger random pools, we then searched for an optimal pool with lower risk, which is a classic portfolio optimization problem.

2. We forecast the home value index 24 months ahead using time-series and neural-network models (ARIMA and LSTM), without any macroeconomic variables.

3. We also tried maximizing the Sharpe ratio, but the minimum-volatility index gave us better insight into risk reduction. Combined with the HVI forecasts from phases 1 and 2, this could be used to identify which set of customers to seek so that the pool carries the least risk over the forecast horizon. We believe adding more business constraints to this optimization problem would help the company with its initial customer acquisition strategy.
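The random-pool finding in phase 1 is the familiar diversification effect, and simulated data can illustrate it. The sketch below assumes that each local index's monthly return is a shared national shock plus independent local noise. This one-factor structure is an illustrative assumption, not the project's data or model. The sketch then measures the volatility of an equally weighted pool of randomly chosen regions. Under this assumption the pool's standard deviation is roughly sqrt(national_sd**2 + local_sd**2 / N). It therefore falls as the pool size N grows but never drops below the national component.

```python
import random
import statistics


def simulate_returns(n_regions, n_months, national_sd, local_sd, seed=0):
    """Monthly returns per region = shared national shock + independent local shock.

    This one-factor structure is an illustrative assumption, not the project's model.
    """
    rng = random.Random(seed)
    national = [rng.gauss(0.0, national_sd) for _ in range(n_months)]
    return [
        [national[t] + rng.gauss(0.0, local_sd) for t in range(n_months)]
        for _ in range(n_regions)
    ]


def pool_volatility(returns, pool_size, seed=1):
    """Standard deviation of an equally weighted pool of randomly chosen regions."""
    rng = random.Random(seed)
    members = rng.sample(returns, pool_size)
    n_months = len(members[0])
    # Pool index return each month = average of the member regions' returns.
    pooled = [statistics.fmean(r[t] for r in members) for t in range(n_months)]
    return statistics.stdev(pooled)


returns = simulate_returns(n_regions=200, n_months=240, national_sd=0.005, local_sd=0.02)
for size in (1, 20, 40, 60):
    print(f"pool of {size:>2}: volatility {pool_volatility(returns, size):.4f}")
```

With these parameters, the expected volatility is about 0.021 for a single region and about 0.0056 for a pool of 60. The larger pool sits close to the national floor of 0.005, which mirrors the pattern of less volatile indices for larger pools.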

Programming Language: Python

Tool: R

Models: MPT (random pools), Standard Deviation (SD), ARIMA, Long Short-Term Memory (LSTM), Sharpe Ratio Index, Minimum Volatility Index
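The phase 3 comparison between minimum volatility and Sharpe-ratio maximization shows up clearly in a two-asset case: a volatile local index paired with a less volatile national index. The sketch below makes three assumptions:

- The means, volatilities, correlation, and risk-free rate are hypothetical, not project estimates.
- Weights are long-only values between 0 and 1.
- A simple grid search is enough to find the minimum-volatility mix and the maximum-Sharpe mix.

It also checks the grid result against the textbook two-asset minimum-variance weight, w = (sd_nat**2 - cov) / (sd_local**2 + sd_nat**2 - 2 * cov).

```python
import math

# Hypothetical inputs: asset 0 = a volatile local index, asset 1 = the national index.
MU = (0.05, 0.04)    # expected annual returns
SD = (0.12, 0.06)    # annual volatilities
RHO = 0.3            # correlation between the two indices
RISK_FREE = 0.02     # risk-free rate used in the Sharpe ratio


def portfolio_stats(w):
    """Expected return and volatility with weight w on the local index, 1 - w on the national."""
    cov = RHO * SD[0] * SD[1]
    mean = w * MU[0] + (1 - w) * MU[1]
    var = (w * SD[0]) ** 2 + ((1 - w) * SD[1]) ** 2 + 2 * w * (1 - w) * cov
    return mean, math.sqrt(var)


def sharpe(w):
    """Sharpe ratio: excess return over the risk-free rate per unit of volatility."""
    mean, vol = portfolio_stats(w)
    return (mean - RISK_FREE) / vol


def closed_form_min_variance_weight():
    """Unconstrained two-asset minimum-variance weight on the local index."""
    cov = RHO * SD[0] * SD[1]
    return (SD[1] ** 2 - cov) / (SD[0] ** 2 + SD[1] ** 2 - 2 * cov)


grid = [i / 1000 for i in range(1001)]  # long-only weights on the local index (assumption)
min_vol_w = min(grid, key=lambda w: portfolio_stats(w)[1])
max_sharpe_w = max(grid, key=sharpe)

print(f"minimum-volatility weight on local index: {min_vol_w:.3f}")
print(f"maximum-Sharpe weight on local index:     {max_sharpe_w:.3f}")
print(f"closed-form minimum-variance weight:      {closed_form_min_variance_weight():.3f}")
```

With these inputs, the minimum-volatility mix puts about 10% on the local index and is less volatile than the national index alone. The Sharpe-maximizing mix puts about 22% on it, taking on more volatility in exchange for more excess return. Minimizing volatility does not use expected returns at all, so it is not affected by errors in those estimates.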

Project | 03

Farmers Insurance Recommendation

Farmers Insurance spent heavily on advertising, but it did not know which networks and timeslots its ads landed in, nor did it understand its target audiences' behavior.

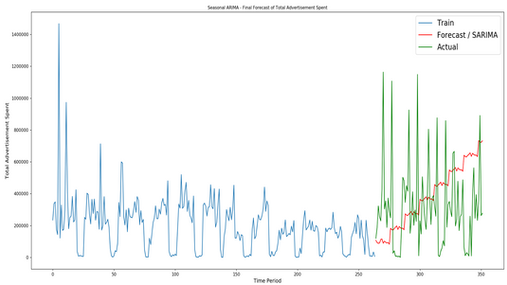

First, I built insights into spending by analyzing airing shares, spending shares, and viewership for each network and timeslot (prime time, weekend, late fringe). Second, I trained and tested a SARIMA model on historical data to predict advertising spending for the next 6 months.

Combining these insights with the forecast, I recommended that Farmers Insurance cut prime-time spending in half and reallocate that half to weekend and late-fringe slots to reach broader audiences.
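The share calculations and the recommended reallocation are simple arithmetic over daypart budgets. The sketch below uses hypothetical spend figures, not Farmers Insurance data. The recommendation does not specify how the freed budget is divided, so the sketch assumes an equal split between weekend and late fringe.

```python
def shares(amounts: dict[str, float]) -> dict[str, float]:
    """Convert per-daypart amounts (spend, airings, or viewers) into shares of the total."""
    total = sum(amounts.values())
    return {daypart: value / total for daypart, value in amounts.items()}


def reallocate_prime_time(spend: dict[str, float], cut: float = 0.5) -> dict[str, float]:
    """Cut prime-time spend by `cut` and split the savings equally (assumption)
    between the weekend and late-fringe dayparts."""
    freed = spend["prime time"] * cut
    new_spend = dict(spend)
    new_spend["prime time"] -= freed
    new_spend["weekend"] += freed / 2
    new_spend["late fringe"] += freed / 2
    return new_spend


# Hypothetical spend in $ thousands per daypart (illustrative only).
spend = {"prime time": 600.0, "weekend": 250.0, "late fringe": 150.0}
new_spend = reallocate_prime_time(spend)
print(shares(spend))
print(new_spend)
print(shares(new_spend))
```

Total spend stays the same; only its distribution across dayparts changes. The same `shares` helper also works on airing counts or viewership.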

Programming Language: Python

Tool: Tableau, Prezi

Model: SARIMA

Project | 04

Predicting Divorces Using Machine Learning Methods

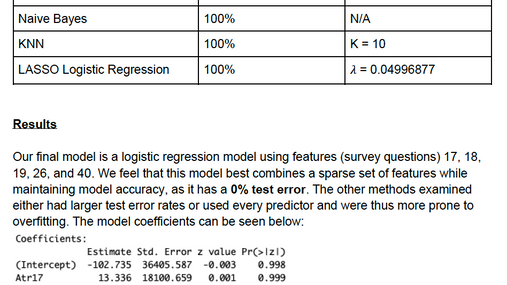

Divorce has increased drastically in the last 10-15 years. Our team wanted to examine whether couples' answers to survey questions reveal patterns related to divorce. We aimed to build supervised machine-learning models that predict whether a couple is married or divorced from their responses to 54 survey questions.

In the process, we identified relationships between the answers and the outcome, using the best (lowest) mean squared error (MSE) as the common standard across all models. The models were:

1. LDA

2. Naive Bayes

3. KNN

4. LASSO Logistic Regression

We found that 5 of the 54 questions were the most effective for predicting divorce.
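The outcome is binary (married or divorced), so MSE computed on 0/1 class predictions equals the misclassification rate. That is what lets it serve as one yardstick across LDA, Naive Bayes, KNN, and LASSO logistic regression. Here is a minimal check with hypothetical labels, assuming divorced is coded as 1 and married as 0:

```python
def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences of numbers."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def error_rate(y_true, y_pred):
    """Fraction of predictions that differ from the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical labels: 1 = divorced, 0 = married
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]  # hypothetical class predictions from some model
print(mse(y_true, y_pred), error_rate(y_true, y_pred))  # 0.25 0.25
```

If a model outputs probabilities instead of hard labels, the same MSE formula gives the Brier score rather than the error rate.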

Tool: R

Model: Naive Bayes, Linear Discriminant Analysis (LDA), kNN, Logistic Regression, LASSO

Project | 05

Statistical Analyses of Roger Federer's Performance in 2013 and 2014

Tennis has grown as a global sport during and after the pandemic. I am a big fan of tennis: I was once ranked No. 1 in Hong Kong (HK) and represented the HK Tennis Team in junior tournaments including World Junior Tennis, the Junior Davis Cup, and the China National Youth Games. Roger Federer is my favorite tennis player. Over his career he went through injuries and changed rackets.

I examined Roger Federer's performance in 2013 and 2014. I chose those two years because he played through 2013 injured, whereas in 2014 he was back to full health. I wanted to know which areas of his game improved the most, and at which Grand Slams and on which surfaces. I used a combination of analyses and visualizations to look at his winning percentages by year, surface, and Grand Slam.

The analyses showed that, after switching to a new racket and returning to full health, Federer had a significantly better 2014 than 2013.
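A winning percentage by year and surface comes down to grouping match results and dividing wins by matches played. The match records below are hypothetical placeholders, not Federer's actual 2013 or 2014 results.

```python
from collections import defaultdict


def win_percentages(matches):
    """Return {(year, surface): win %} from (year, surface, won) match records."""
    played = defaultdict(int)
    wins = defaultdict(int)
    for year, surface, won in matches:
        played[(year, surface)] += 1
        wins[(year, surface)] += int(won)
    return {key: 100.0 * wins[key] / played[key] for key in played}


# Hypothetical match records (illustrative only): (year, surface, won?)
matches = [
    (2013, "hard", True), (2013, "hard", False), (2013, "clay", True),
    (2013, "grass", False), (2014, "hard", True), (2014, "hard", True),
    (2014, "clay", False), (2014, "grass", True),
]
for (year, surface), pct in sorted(win_percentages(matches).items()):
    print(year, surface, f"{pct:.1f}%")
```

To get the per-Grand Slam breakdown, key on the tournament name instead of the surface.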

Tool: R

Model: Linear Regression, Exploratory Data Analysis (EDA)

Project | 06

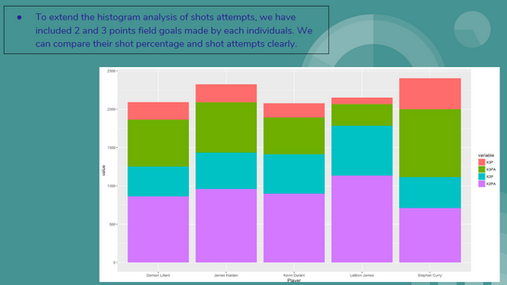

Comparing NBA Players with LeBron James Using Statistical Analyses, 2007-2017

LeBron James is arguably the greatest player of all time. To test that statement, we measured and predicted his positional efficiency and statistics and compared them with those of other NBA players, past and present, from 2007 to 2017.

We used a combination of machine-learning models and visualizations to examine whether LeBron James is the greatest player of all time (GOAT). The techniques included:

1. Decision Tree

2. Random Forest

3. LDA

4. KNN

5. Regression

Using two variables, offensive win shares (OWS) and defensive win shares (DWS), our results showed that LeBron James is the greatest player of all time.

Tool: R, Prezi

Model: Exploratory Data Analysis (EDA), Decision Tree, Random Forest, LDA, 3D scatterplot with regression plane, kNN

To see more or to discuss possible work, let's talk.


© 2024 by James Kong

Call: 604-313-6221
